This is really important when you're recording digital audio: the recording digital sink has to be kept rigidly in sync with the transmitting digital source. Over digital links like S/PDIF, hardware devices can both transmit and receive a timing signal alongside the audio data, which is used to perform this kind of synchronisation.

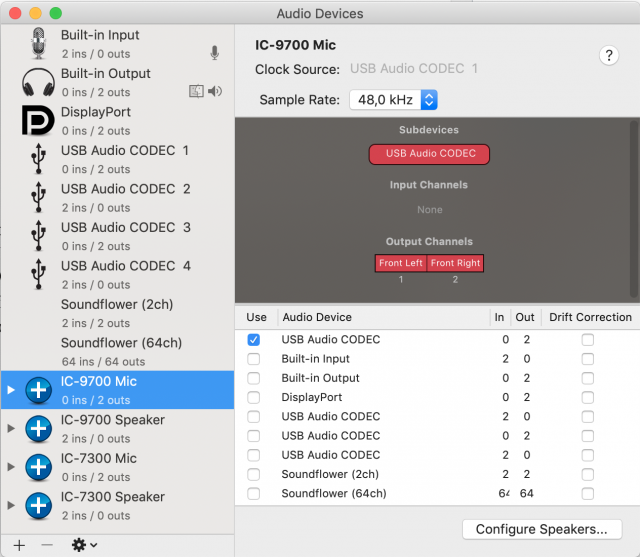

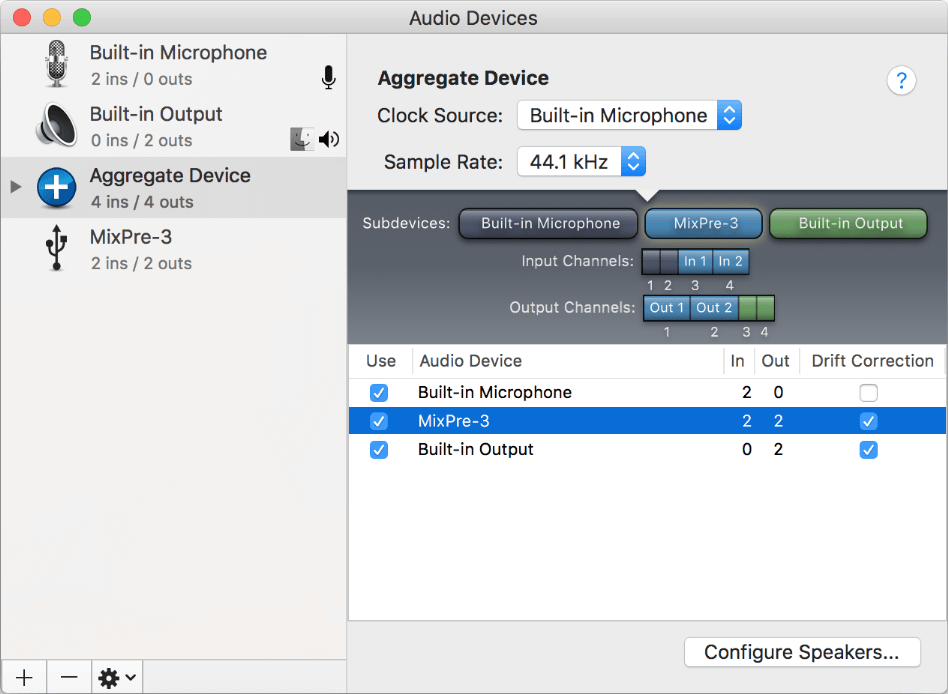

# AGGREGATE DEVICE FOR USB MIDI MAC TV #

So for example, you might connect a TV via HDMI and use it as a second monitor to watch movies, but you might want both the TV speakers and your computer speakers to be used. Maybe you've plugged in computer speakers with a subwoofer and you like the added bass that the TV speakers can't produce. So you use Audio MIDI Setup to add an aggregate device for both your computer and the TV. But the computer is sending digital audio over HDMI to the TV, which decodes it independently, so there needs to be some way to make sure both the computer and the TV play it back at the same rate, without clock drift screwing things up over time. If the devices' clocks get out of sync, the audio would drift out of sync too, and eventually one or more of the hardware devices would start exhausting its data buffer before the others had finished with theirs.

Even if these devices are running at the same sample rate, they're probably all using independent hardware clocks to send buffered audio through their DACs and actually generate sound.
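To put rough numbers on that drift, here is a minimal sketch. The 100 ppm clock mismatch and the 512-frame buffer are illustrative assumptions, not measurements from any particular hardware, and the function names are made up for this example.

```python
def drift_seconds(ppm_offset, elapsed_seconds):
    """Audio-time offset accumulated between two clocks whose
    rates differ by ppm_offset parts per million."""
    return elapsed_seconds * ppm_offset / 1_000_000


def seconds_until_buffer_gap(nominal_rate_hz, ppm_offset, buffer_frames):
    """Time until the faster device has consumed buffer_frames more
    frames than the slower one (i.e. a whole buffer of difference)."""
    extra_frames_per_second = nominal_rate_hz * ppm_offset / 1_000_000
    return buffer_frames / extra_frames_per_second


# Assumed scenario: both devices nominally at 48 kHz, clocks 100 ppm apart.
movie_length = 2 * 60 * 60  # a two-hour movie, in seconds
print(drift_seconds(100, movie_length))            # offset by the end of the movie
print(seconds_until_buffer_gap(48_000, 100, 512))  # time to drift one 512-frame buffer
```

Under these assumptions the two outputs end up about 0.72 seconds apart by the end of the movie, and a single 512-frame buffer's worth of difference builds up in under two minutes, which is why unsynchronised devices can't simply be left free-running.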

In short, it's to do with keeping different hardware devices in sync: when you create an aggregate device, more than one piece of sound generation hardware may be required to operate concurrently. (This is answering an old question, but I came across it while researching the topic.)
